The German Aerospace Center (DLR) uses cutting-edge technology to advance research and innovation – for a sustainable and interconnected future in aerospace, energy and mobility.

With HIDA's mobility programs, talented data scientists can work on cutting-edge space research at DLR and help develop innovative energy and mobility concepts.

Apply now!

The programs

Get to know DLR with HIDA

DLR is part of the Helmholtz Association.

Talented data scientists can conduct research at the center through the following programs.

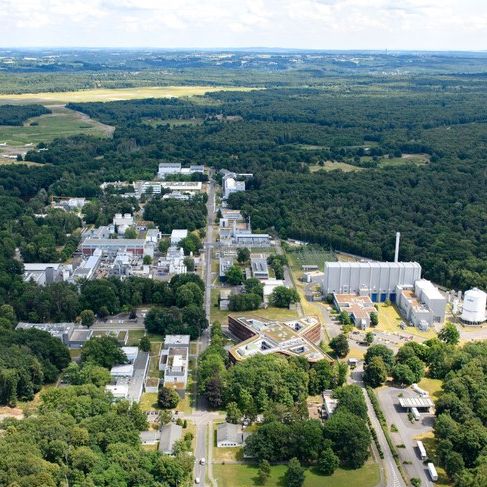

The German Aerospace Center (DLR) conducts cutting-edge space research and develops innovative energy and mobility concepts. The center also addresses questions of security and digitalization. With around 10,000 employees at over 30 locations, DLR explores space, develops low-emission aircraft, tests new engines and sends Earth observation satellites into space. In addition, the German Space Agency is based at DLR and manages national and European space programs.

Main research areas:

- Aeronautical engineering and sustainable flight concepts

- Space research and satellite technology

- Energy and climate research

- Automated and connected driving

- Artificial intelligence and digital technologies

- Security and defense

- Quantum computing and high-performance computing

The sites


DLR is active at several research sites:

- Cologne (main location)

- Berlin

- Braunschweig

- Bremen

- Hamburg

- Göttingen

- Stuttgart

- Augsburg

- Jena

- Neustrelitz

- Oberpfaffenhofen

Further branches in:

- Aachen

- Bonn

- Cottbus

- Dresden

- Geesthacht

- Hannover

- Lampoldshausen

- Oldenburg

- Rheinbach

- Sankt Augustin

- Stade

- Trauen

- Ulm

- Weilheim

- Zittau

DLR's expertise in Data Science and AI

DLR has outstanding expertise in the use of data science and artificial intelligence to solve complex technological challenges. In interdisciplinary teams, scientists from engineering, computer science and natural sciences work together to develop innovative applications.

By using powerful AI models, big data analyses and simulations, DLR is making a significant contribution to the further development of aerospace technologies and sustainable mobility concepts.

- Autonomous flight and flight control systems

- Satellite-based Earth observation and data analysis

- Optimization of traffic flows with AI

- Simulation models for new mobility concepts

- Machine learning for space applications

With over 10,000 employees, the DLR conducts research into cutting-edge technologies for aerospace, energy and transportation. In numerous institutes, researchers are developing AI-supported solutions for sustainable innovations.

Notes on application


Helmholtz hosts

Meet some potential hosts at various Helmholtz centers and learn more about their respective data science-based research by clicking on the cards.

Please note: Contact your potential supervisor by email in advance to propose and discuss a research project. Submit your application only after the project has been agreed.

If you have any questions, please send an e-mail to: hida@helmholtz.de

Would you like to become a Helmholtz host yourself and are you looking for support for your research project? Then please also contact the above e-mail address.

Apply now!

The hosts at DLR

Meet some of DLR's potential hosts and learn more about their respective research based on data science.

Before contacting the potential hosts, please read the application instructions.

Ronny Hänsch

SAR-Technology

Contacts

Short summary of your group's research: The SAR Technology department addresses everything from building up the sensor (currently the multi-frequency fully polarimetric F-SAR), planning and executing measurement campaigns, SAR processing (i.e. focusing, geocoding, image enhancement), up to the analysis of the final image product. The Machine Learning team mainly focuses on the last part, i.e. using machine learning to derive higher level information (e.g. semantic maps, geo-/bio-physical parameters) from the SAR images. Topics of relevance are single-image superresolution, denoising (despeckling), ensemble learning, deep learning, image synthesis, semantic segmentation, semi-/weakly-/self-supervised learning, XAI, out-of-distribution/anomaly detection, etc.

What infrastructure, programs and tools are used in your group? Programming is mostly done in Python or C++; deep learning development is done in either PyTorch or TensorFlow. We have access to GPU servers.

What could a participant of the HIDA Trainee Network learn in your group? How could he or she support you in your group? We offer insights into all aspects of SAR, including hardware, focusing, processing, and analysis, combined with profound knowledge of machine/deep learning, applied to interesting and highly relevant applications (e.g. forest monitoring, urban growth, development of glaciers, etc.). We are looking for people with knowledge of machine/deep learning and/or image processing to apply their skills to a very exciting and powerful type of image data, i.e. SAR, or to use machine learning and SAR to solve relevant tasks in emerging applications.

Kristin Rammelkamp

Terahertz and Laser Spectroscopy

Contacts

Short summary of your group's research: In the DLR junior research group “Machine learning for planetary in-situ spectroscopic data”, we investigate data measured in the laboratory and on Mars by instruments like ChemCam (NASA’s Mars Science Laboratory). We train and evaluate supervised algorithms for the prediction of elemental abundances in rocks and soils, and for the classification of mineralogical classes. Additionally, we use unsupervised methods for pattern recognition in large spectroscopic datasets and also aim for physics-informed, machine-learning-based simulations of spectra from geological targets.

What infrastructure, programs and tools are used in your group? For the machine learning part of our research, we work with Python frameworks such as PyTorch and scikit-learn. We have laboratory instruments that can be used to measure spectroscopic data, including under simulated Martian and vacuum atmospheric conditions. The spectroscopic techniques we mainly work with are laser-induced breakdown spectroscopy (LIBS) and Raman spectroscopy.

What could a participant of the HIDA Trainee Network learn in your group? How could he or she support you in your group? You can learn several aspects in our group, starting with the basics of the spectroscopic methods and the nature of the data, especially with regard to planetary in-situ exploration. Regarding machine learning, you can learn how to optimize models trained with spectroscopic data for different purposes, such as regression and classification. Furthermore, you can benefit from our experience in real planetary mission involvements. We look forward to learning from your machine learning experience in other applications and how it could be adapted to spectroscopic data. In particular, we are interested in the explainability of machine learning models, in order to better understand their predictions and performance, and in ways to include physical knowledge in the training process.

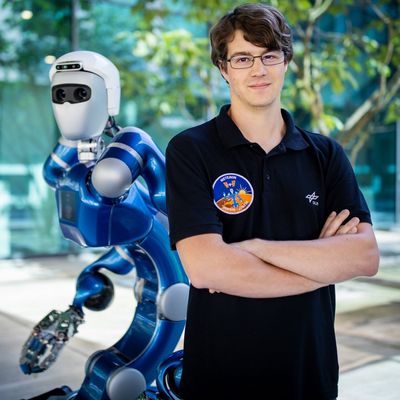

Daniel Leidner

Autonomy and Teleoperation

Contacts

Short summary of your group's research: The Institute of Robotics and Mechatronics, Department of Autonomy and Teleoperation explores all aspects of robot autonomy. This includes autonomous operation with AI-based approaches to task and motion planning, as well as teleoperation modalities for remote operation under human supervision with intuitive interfaces. All of this is embedded in fault-tolerant autonomy architectures that enable resilient decision making, inspection, monitoring, and error handling. The design of software components for embedded and distributed computing platforms enables the use of these methods for space applications under hard real-time conditions.

What infrastructure, programs and tools are used in your group? To increase the autonomy of robotic systems, we investigate techniques to generate robot programs from logical task descriptions (e.g. PDDL). The robot Rollin' Justin (see picture) is one of several demonstration platforms for these tasks. The software stack of the robot is based on different modules developed in Python, C++, and Simulink. We develop model-based techniques for decision making that leverage real-world robot telemetry as well as semantic information inferred through physics simulations (e.g. Gazebo). To control the robot (e.g. from aboard the ISS), intuitive control modalities such as shared control and supervised autonomy are developed.

What could a participant of the HIDA Trainee Network learn in your group? How could he or she support you in your group? We offer visiting researchers the opportunity to participate in the development of software for some of the most advanced robots in the world. We utilize advanced programming techniques for use under real-time and non-real-time conditions. This includes integrating complex control strategies for compliant robots with AI-based reasoning methods, developing physics-based reasoning for error handling, and developing multi-modal robot interfaces in the context of astronaut-robot collaboration. Visiting researchers will learn to apply an agile, concurrent software engineering process to develop software components for space-qualified robots.

Andreas Gerndt

Visual Computing and Engineering

Contacts

Short summary of your group's research: The mission of the DLR Institute for Software Technology is research and development in software engineering technologies, and the incorporation of these technologies into DLR software projects. The department of Software for Space Systems and Interactive Visualization investigates and develops methods for robust and reusable software solutions for the design and operation of space missions as well as for interactive visualization applications.

What infrastructure, programs and tools are used in your group? Besides modern computer systems, we use well-equipped laboratories for integrating flight software into onboard systems, for investigating immersive software in virtual environments, and for evaluating software solutions in mixed and extended reality. This includes large immersive virtual reality powerwall displays, multi-pipe multi-touch display walls, and equipment for fully embedded systems development. Additionally, we operate a software laboratory for highly agile joint software development sessions. All systems have access to institute servers for high-performance computing, cluster solution development, and high-end visualization approaches.

What could a participant of the HIDA Trainee Network learn in your group? How could he or she support you in your group? We are looking for excellent scientists for all activities in the research groups of the department: robust and resilient onboard software, formal verification of spacecraft design, digital transformation in the space domain, in-situ data processing and visualization on high-performance computers, topology-based data analysis for large-scale datasets, visual data analytics, virtual reality, mixed reality, extended reality, human factors and user studies, applications in DLR's domain like space, aeronautics, transportation, climate change, and more.

Annette Hammer

Networked Energy Systems, Energy System analysis

Contacts

Three-sentence summary of your group's research

The Energy Meteorology Group has long-standing experience in solar forecasting, PV modelling and satellite retrieval. We operate a sensor and camera network in north-west Germany.

What infrastructure, programs and tools are used in your group?

Python and PyTorch

What could a guest researcher learn in your group? How could he or she support you in your group?

You will use meteorological data, camera images, satellite images and photovoltaic power measurements. You will learn how we model PV power from these input data. You will support us in forecasting PV power production for the next minutes and hours from these input data.
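As a rough illustration of what short-term PV forecasting involves, the sketch below implements a persistence forecast, a common reference baseline in solar forecasting that simply assumes the power stays at its last measured value. It is not the group's method. The function names, the one-minute resolution and the power values are made up for this example.

```python
def persistence_forecast(series, horizon):
    """Pair each measurement with the value observed `horizon` steps later.

    The forecast for time t + horizon is simply the measurement at time t.
    Returns a list of (forecast, actual) tuples.
    """
    return [(series[t], series[t + horizon]) for t in range(len(series) - horizon)]


def rmse(pairs):
    """Root-mean-square error over (forecast, actual) pairs."""
    return (sum((f - a) ** 2 for f, a in pairs) / len(pairs)) ** 0.5


# Hypothetical PV power readings in kW, one value per minute (illustrative only).
pv_power_kw = [0.0, 1.0, 2.0, 4.0, 4.0, 3.0, 1.0]

pairs = persistence_forecast(pv_power_kw, horizon=1)
error = rmse(pairs)
print(f"1-step persistence RMSE: {error:.3f} kW")
```

A model that uses camera or satellite images would typically be judged by how much it improves on a baseline of this kind.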

Anne Papenfuß

Human Factors Department

Contacts

Three-sentence summary of your group's research: The Human Factors department supports the development of advanced concepts and assistance systems for pilots, air traffic controllers and control center personnel in terms of human-centered automation. It is anchored as a cross-sectional function in the Institute of Flight Guidance and supports the specialist departments in the early concept phases. An interdisciplinary team of engineers, psychologists and computer scientists plans and manages validations with operators in real-time simulation, field trials and flight tests. In general, it is important to give equal weight to improving individual human-machine cooperation and to improving cooperation between the on-board and ground-based systems and the people involved in them.

What infrastructure, programs and tools are used in your group?

- Virtual environments, high-fidelity simulators of air traffic control working positions, aircraft cockpits, VR glasses

- Physiological measurement tools like EEG, fNIRS, ECG

- Eye tracking

- Speech recognition

What could a guest researcher learn in your group? How could he or she support you in your group?

- A participant could learn about data representing human factors in automated systems, as well as how data are collected in controlled experimental designs and used for informed decisions about system design.

- A participant can furthermore learn about research topics on human-machine interaction and cooperation in the field of aviation.

- Partly automated data processing and analysis, such as detection of patterns and anomalies in heterogeneous data (e.g. by AI)

- Development of analysis and visualization concepts

Claudia Stern

Clinical Aerospace Medicine

Contacts

Three-sentence summary of your group's research: Long duration human spaceflight missions create medical support challenges for eye changes, which can occur in nearly two-thirds of astronauts. To address these challenges, we are developing artificial intelligence applications to support crew members in monitoring their eyes. These applications have the potential to be used for crew medical support aboard the International Space Station, and beyond.

What infrastructure, programs and tools are used in your group? For the machine learning component of our research, Python, convolutional neural networks, TensorFlow, GPU servers, and computer vision tools are used to conduct our analyses. To collect the raw image and video data used in our analysis, we use ophthalmology imaging tools (e.g., fundoscopy, optical coherence tomography (OCT), etc.) commonly used in clinical practice worldwide. You would require access to a development environment (e.g., VS Code, PyCharm, etc.), an understanding of and adherence to data security and ethics standards, and a modern smartphone/tablet.

What could a guest researcher learn in your group? How could he or she support you in your group? In our group, you could learn about the eye changes astronauts experience during long duration spaceflight, especially with regard to the retina. You could advance your machine learning skills to optimize regression, classification, and object detection models trained with our image and video data. In particular, we are interested in and open to new ways to improve the predictions and performance of our networks and models. By joining our group, you could benefit from our experience in aerospace medicine, human spaceflight, and International Space Station research, and we would benefit from your expertise in computer vision for human medical data. We would look forward to the possibility of working together.

Peter Jung

Real-Time Data Processing

Contacts

Three-sentence summary of your group's research: The new SensorAI group in the OS-EDP department will focus on challenging data science and sensing problems related to sensors and optical instruments and imaging devices. This includes the design, development and evaluation of data aggregation methods, calibration and recovery algorithms, and high-dimensional data analysis. Recent theoretical advances in sensing (compressed sensing, low-rank recovery and super-resolution) and AI methods (from deep learning to neural-augmented/unfolding algorithms, PINNs and more recent AI architectures) will be used to address inverse problems in signal processing and physics.

What could a guest researcher learn in your group? How could he or she support you in your group?


Guest researchers are invited to cooperate on topics with the SensorAI group in the EDP department. This includes (but is not limited to):

1) AI for solving inverse problems in signal processing, physics and data science

2) Deep learning and neural-augmented/unfolding algorithms

3) Uncertainty quantification

4) Quantum computing
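To give a flavour of the sparse inverse problems mentioned in the summary above (compressed sensing), here is a minimal sketch of the iterative shrinkage-thresholding algorithm (ISTA), a textbook method for L1-regularized least squares. It is a generic illustration, not the group's code. The toy matrix, the sparsity pattern, the regularization weight `lam` and all function names are assumptions made for this example.

```python
import random


def soft_threshold(value, threshold):
    """Shrink a scalar toward zero (proximal operator of the L1 norm)."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def matvec(matrix, vector):
    """Compute A @ x for a matrix stored as a list of rows."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def rmatvec(matrix, vector):
    """Compute A^T @ r for a matrix stored as a list of rows."""
    return [sum(matrix[i][j] * vector[i] for i in range(len(matrix)))
            for j in range(len(matrix[0]))]


def objective(matrix, y, x, lam):
    """0.5 * ||A x - y||^2 + lam * ||x||_1"""
    residual = [p - t for p, t in zip(matvec(matrix, x), y)]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(v) for v in x)


def ista(matrix, y, lam=0.01, n_iter=500):
    """Run ISTA from a zero start and return the estimate of x."""
    # The squared Frobenius norm bounds the largest eigenvalue of A^T A,
    # so 1 / lipschitz is a safe (if conservative) step size.
    lipschitz = sum(a * a for row in matrix for a in row)
    step = 1.0 / lipschitz
    x = [0.0] * len(matrix[0])
    for _ in range(n_iter):
        residual = [p - t for p, t in zip(matvec(matrix, x), y)]
        gradient = rmatvec(matrix, residual)
        x = [soft_threshold(xi - step * g, step * lam)
             for xi, g in zip(x, gradient)]
    return x


# Toy problem: 12 random measurements of a 20-dimensional, 2-sparse signal.
rng = random.Random(0)
n_measurements, n_unknowns = 12, 20
A = [[rng.gauss(0.0, 1.0) for _ in range(n_unknowns)]
     for _ in range(n_measurements)]
x_true = [0.0] * n_unknowns
x_true[3], x_true[11] = 1.5, -2.0
y = matvec(A, x_true)

x_hat = ista(A, y)
print("objective at zero:", objective(A, y, [0.0] * n_unknowns, 0.01))
print("objective at estimate:", objective(A, y, x_hat, 0.01))
```

Unfolding approaches of the kind listed above typically treat a fixed number of such iterations as network layers and learn the step size and threshold from data.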

Andrés Camero Unzueta

EO Data Science

Contacts

Three-sentence summary of your group's research: The Helmholtz AI consultant team @ DLR provides expertise in Earth observation, robotics and computer vision, together with an HPC/HPDA support unit.

The team covers a comprehensive package of AI-related services. It supports both projects within LRV and cooperative projects with other Helmholtz centers. In addition, the EO Data Science Department develops AI methods for Earth observation data to tackle societal grand challenges.

What infrastructure, programs and tools are used in your group? Coding is mainly done in Python and C/C++, using the most popular optimization and ML/DL frameworks. We have access to GPU servers.

What could a guest researcher learn in your group? How could he or she support you in your group? We offer a broad range of AI expertise, with a strong focus on Earth observation applications. We are looking for people with knowledge in machine learning, optimization and/or image processing to apply their skills to tackle societal grand challenges using Earth observation data.

Eva-Maria Elmenhorst

Sleep and Human Factors Research

Contacts

Three-sentence summary of your group's research: We study the homeostatic and circadian processes that regulate the quality, duration, and timing of sleep, as well as cognitive performance. Data are collected both in the laboratory and in the operational environment, e.g. the cockpit. We study factors like acute and chronic sleep loss, workload, stimulants, circadian misalignment, and inter-individual differences in the vulnerability to sleep loss. We are also interested in cognitive and operational performance in extreme environments, e.g. space, and the influence of a multitude of stressors, such as hypoxia, hypercapnia, simulated microgravity (head-down tilt bedrest), or microgravity during parabolic flights.

What infrastructure, programs and tools are used in your group? During our studies, we gathered a rich multitude of data under extreme conditions (fatigue, hypoxia, hypercapnia, microgravity): behavioral responses in cognitive tasks and simulations, eye tracking data, physiological measurements (polysomnography, ECG, EEG), actigraphy, fMRI and PET.

What could a guest researcher learn in your group? How could he or she support you in your group? Guest researchers can learn sleep measuring techniques (including polysomnography), circadian analysis techniques (including mathematical modeling of circadian and sleep-wake regulation), eye-tracking techniques, and be part of large laboratory (e.g., bedrest studies) and field studies (e.g., assessing fatigue and cognitive performance in pilots). We welcome expertise in bioengineering, psychology, medicine, biology, and related disciplines, with a special emphasis on skills in advanced statistical analyses and machine learning.

Susanne Bartels

Sleep and Human Factors Research

Contacts

Three-sentence summary of your group's research: We investigate how air, rail and road traffic noise affects sleep, cognitive performance and annoyance. We conduct both laboratory studies and field studies with residents affected by transportation noise and derive exposure-response relationships for awakening probabilities and annoyance that are used to develop protection concepts for the affected population. Noise effects are being studied in healthy adults as well as in vulnerable individuals, e.g. children and elderly people.

What infrastructure, programs and tools are used in your group? To collect data on sleep quality and cardiovascular parameters, we apply polysomnography incl. EEG, ECG, pulse oximetry and actigraphy. Noise exposure data are obtained via acoustic measurements (e.g. by means of class-1 sound level meters). Survey data are obtained via postal or online surveys using, for instance, LimeSurvey. For data management and analysis, we use e.g. SOMNOmedics DOMINO, MATLAB, RStudio and SPSS as well as self-programmed and customized tools based on MATLAB and LabVIEW.

What could a guest researcher learn in your group? How could he or she support you in your group? By joining our group, you could learn about the manifold effects of environmental noise on human health and their underlying mechanisms. You would benefit from our expertise in data collection in controlled experimental designs in the laboratory and the field as well as in surveys. Furthermore, you could increase your knowledge in sleep measurement techniques, e.g. polysomnography, and apply your skills (incl. machine learning skills) in the analysis of cardiovascular reactions to noise (e.g. regarding heart rate variability, pulse transit time) and/or noise-induced reactions in sleep (e.g. vegetative-motoric reactions, identification of EEG arousals).

Expertise in bioengineering, psychology, medicine, biology, and related disciplines, with a special emphasis on skills in advanced statistical analyses, data visualization and machine learning, is very welcome. We are looking forward to an open exchange of ideas and methods for the in-depth investigation of noise impacts on humans.